Artificial intelligence (AI) has been making great strides

in recent years, and one of its most fascinating applications is in the field

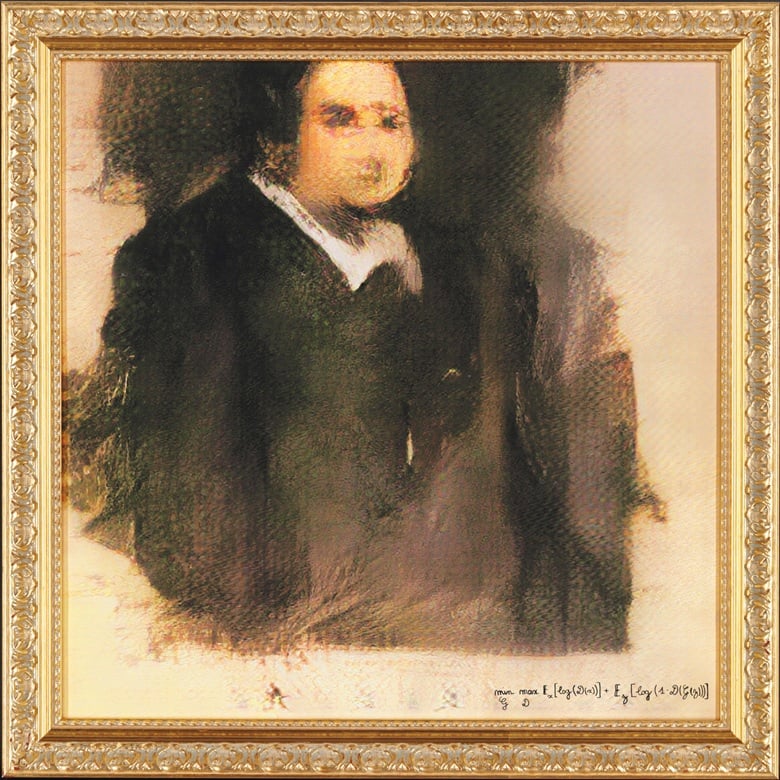

of art. (AIart) With the help of generative adversarial networks (GANs), we are

able to create art that is completely unique and unlike anything that has been

seen before.

Most earlier neural-network approaches to art started from random noise. One early attempt, Portrait of Edmond de Belamy (2018), fetched a whopping US$432,500 at auction. However, these early attempts were crude and took numerous iterations. The approach uses two networks: the first, called the generator, is trained to produce images, and the second, known as the discriminator, scores how plausible the result of each iteration is. This approach is usually referred to as a GAN (generative adversarial network).

The generator's job is to create images from random noise that look as if they could have been real. The discriminator's job is to differentiate between the generated images and the real ones. The two networks are trained together in a feedback loop, with the generator trying to improve its results and the discriminator trying to get better at identifying the generated images.
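
To make that feedback loop a little more concrete, here is a minimal sketch in plain Python. It is a toy, not how any real art generator is built: the "images" are single numbers, the generator is just `a*z + b`, the discriminator is a single logistic unit, and every name and setting here (`train_toy_gan`, the learning rate, the "real" data centred on 4.0) is my own assumption for illustration.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, seed=0):
    """Alternate discriminator and generator updates on 1-D toy data."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters: x_fake = a * z + b
    w, c = 0.1, 0.0  # discriminator parameters: D(x) = sigmoid(w * x + c)

    for _ in range(steps):
        x_real = rng.gauss(4.0, 0.5)  # a "real" sample (assumed distribution)
        z = rng.gauss(0.0, 1.0)       # random noise fed to the generator
        x_fake = a * z + b            # the generator's attempt

        # Discriminator step: gradient ascent on
        # log D(x_real) + log(1 - D(x_fake)).
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        w += lr * ((1.0 - d_real) * x_real - d_fake * x_fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Generator step: gradient ascent on log D(x_fake),
        # i.e. try to make the discriminator call the fake "real".
        d_fake = sigmoid(w * x_fake + c)
        grad_x = (1.0 - d_fake) * w   # d/dx_fake of log D(x_fake)
        a += lr * grad_x * z
        b += lr * grad_x

    return (a, b), (w, c)


if __name__ == "__main__":
    (a, b), (w, c) = train_toy_gan()
    print("generator:", a, b)
    print("discriminator:", w, c)
```

The point is only the shape of the loop: the discriminator is nudged to tell real from generated, then the generator is nudged in whatever direction fools it. Real GANs do the same thing with deep networks and images, and are known to be much fussier to train.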

A slightly simpler approach to creating art with neural networks is to build a smaller network based on a single image or a small number of images from a specific artist. It avoids starting from random noise and can still produce stunning results.

Back in 2015, German researchers from the University of Tübingen discovered that the earlier layers of neural networks trained on images looked mainly at basic picture elements such as line types, shapes, directions, tones, and colour. These elements collectively made a good representation of "style". This means that by training a smaller neural network on a specific artist's style, we can use an existing image as the starting point to generate new artwork in the style of that artist's works.
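
As I understand the published work, "style" is summarised there by a Gram matrix: how strongly each pair of feature channels in a layer fires together, regardless of where in the picture it happens. The sketch below shows that idea in plain Python. The feature maps are hypothetical hand-written lists rather than real network activations, and the normalisation and the function names (`gram_matrix`, `style_loss`) are my own assumptions.

```python
def gram_matrix(features):
    """Channel-by-channel correlations of one layer's activations.

    `features` is a list of channels, each a flat list of activations
    (one value per spatial position).
    """
    n = len(features[0])
    return [
        [sum(x * y for x, y in zip(fi, fj)) / n for fj in features]
        for fi in features
    ]


def style_loss(features_a, features_b):
    """Mean squared difference between the two Gram matrices."""
    ga = gram_matrix(features_a)
    gb = gram_matrix(features_b)
    c = len(ga)
    total = sum(
        (ga[i][j] - gb[i][j]) ** 2 for i in range(c) for j in range(c)
    )
    return total / (c * c)


if __name__ == "__main__":
    # Two hypothetical 2-channel feature maps with the same activations
    # at rearranged positions, plus one that is genuinely different.
    original = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
    rearranged = [[0.0, 1.0, 1.0, 0.0], [1.0, 0.0, 0.0, 1.0]]
    different = [[1.0, 1.0, 1.0, 1.0], [1.0, 1.0, 1.0, 1.0]]
    print(style_loss(original, rearranged))
    print(style_loss(original, different))
```

Because the Gram matrix sums over positions, moving the same marks around the canvas leaves the "style" unchanged, which is roughly why a sketch can lend its line work to a photo without dictating where things go.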

Google's Deep Dream Generator was an early adopter of this approach and offered a Deep Style tab to perform it. Many other systems now exist that promise to reinterpret images, such as your selfie, in the style of famous artists.

In this example, I’m using a simple black and white pen sketch of my own to control the line work, while the colour is sampled from the original image. It's about what I expected and not exciting. I did, however, like the unexpected suggestion of a face showing up in the flower on the left.

Created with Google Deep Dream Generator

Style Transfer is also available at Night Café, and in these examples I used it with two different style reference images. In the first, I'm starting with a different flower photo and again using my tree sketch, only this time I'm using the option to make a short movie of the iterations involved in "finding" the resulting image.

In my last example I'm using a lesser-known still life by Vincent van Gogh (why do so many start with Vincent’s work in their exploration of AIart?).

This time the results are stronger and closer to something that might be considered good, more spontaneous art. But they are still not really impressive.

In my next post I will look at trying to get a better colour likeness in the result.